Initialization

Start by initializing {touchstone} in your package repository with:

touchstone::use_touchstone()

This will:

- create a touchstone directory in the repository root with:

  - config.json, which defines how to run your benchmark. In particular, you can define a benchmarking_repo, that is, a repository you need in order to run your benchmark (use "" if you don't need an additional git repository checked out for your benchmark). This repository will be cloned into benchmarking_path. For example, to benchmark {styler}, we format the {here} package with style_pkg(). The {here} package is not part of the {styler} repository, but with the config below it will be located at touchstone/sources/here during benchmarking. The code you want to benchmark comes from the benchmarked repository, which in our case is the root from where you call use_touchstone() and hence does not need to be defined explicitly.

        {
          "benchmarking_repo": "lorenzwalthert/here",
          "benchmarking_ref": "ca9c8e69c727def88d8ba1c8b85b0e0bcea87b3f",
          "benchmarking_path": "touchstone/sources/here",
          "os": "ubuntu-18.04",
          "r": "4.0.0",
          "rspm": "https://packagemanager.rstudio.com/all/__linux__/bionic/291"
        }

  - script.R, the script that runs the benchmark, also called the touchstone_script.

  - header.R, the script containing the default PR comment header, see ?touchstone::pr_comment.

  - footer.R, the script containing the default PR comment footer, see ?touchstone::pr_comment.

- add the touchstone directory to .Rbuildignore.

- write the workflow files [1] you need to invoke touchstone in new pull requests into .github/workflows/.
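As a quick sanity check after initialization, the following base R sketch reports which of the files named in the list above are missing. The helper missing_touchstone_files() is hypothetical and not part of {touchstone}; it only assumes the directory layout described above.

```r
# Hypothetical helper (not part of {touchstone}): report which of the files
# described above are absent from a repository.
missing_touchstone_files <- function(root = ".") {
  expected <- file.path(
    "touchstone",
    c("config.json", "script.R", "header.R", "footer.R")
  )
  # Keep only the relative paths that do not exist under `root`.
  expected[!file.exists(file.path(root, expected))]
}

# An empty character vector means all four files are in place.
missing_touchstone_files(".")
```

Run it from the repository root; any path it returns points at a file that initialization did not create or that was removed afterwards.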

Now you just need to modify the touchstone script touchstone/script.R to run the benchmarks you are interested in.

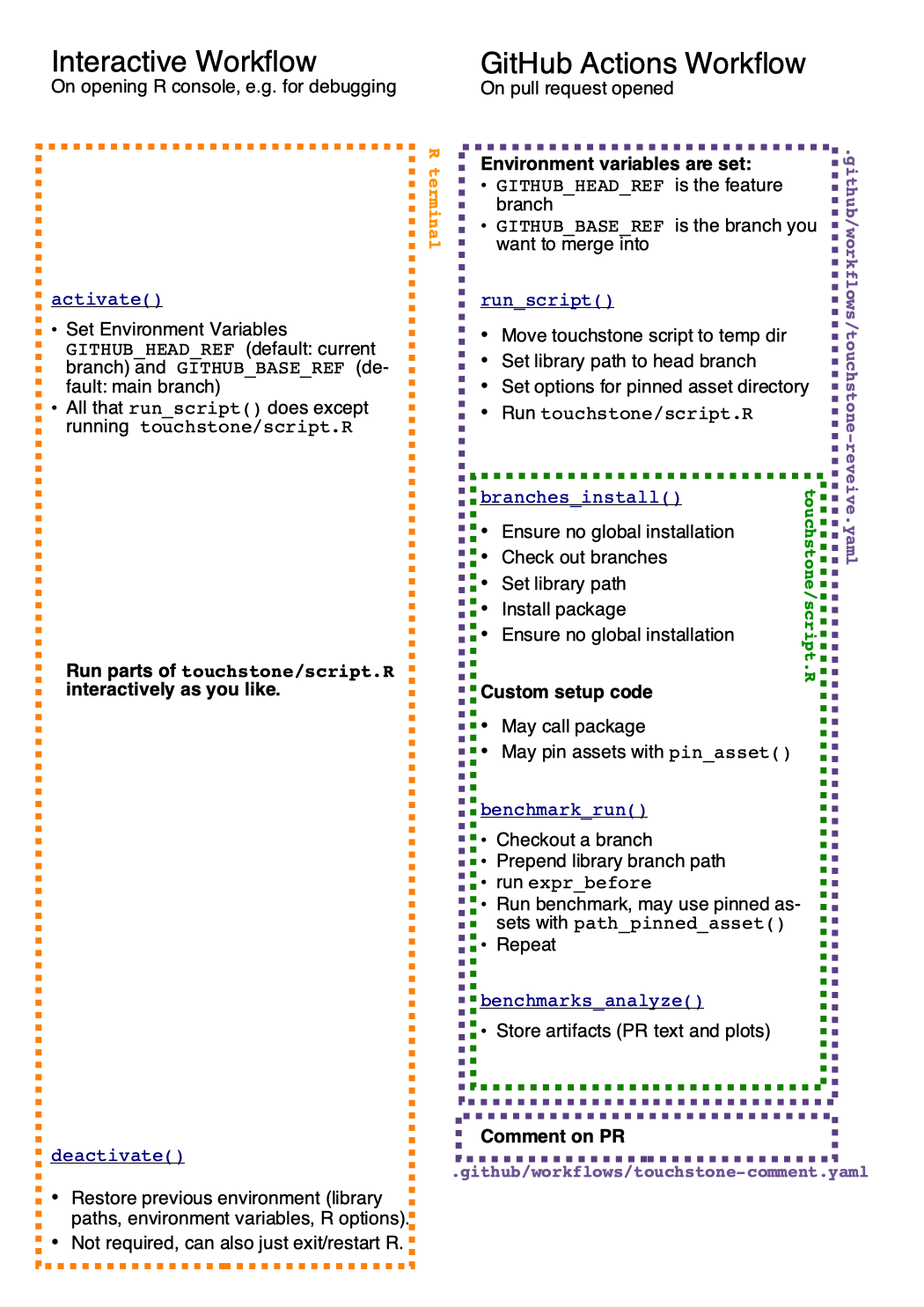

Workflow overview

While you eventually want to run your benchmarks on GitHub Actions for every pull request, it is handy to develop interactively and locally first to avoid long feedback cycles with trivial errors. The below diagram shows these two different workflows and what they entail.

Note that there are two GitHub Actions workflow files in .github/workflows/ for security reasons.

In the remainder, we’ll explain how to adapt the touchstone script, which is the most important script you need to customize.

Adapting the touchstone script

The file consists of three parts, two of which you will need to modify to fit your needs. Within the GitHub Action, {touchstone} will always run the touchstone/script.R from the HEAD branch, so you can modify or extend the benchmarks if needed to fit your current branch. Be aware that a few functions, such as branches_install(), pin_assets() or benchmark_run(), perform local git checkouts. The git status should therefore be clean, and no other processes should run in the directory.

The touchstone script will be run with run_script() on GitHub Actions with the required environment variables GITHUB_HEAD_REF and GITHUB_BASE_REF set for various reasons explained in the help file. If you want to work on your touchstone script interactively, i.e. run it line by line, you must activate touchstone first with activate(), which sets environment variables, R options and library paths.
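Before stepping through the script line by line, it can help to verify that the two environment variables mentioned above are present. The base R sketch below does only that. The helper refs_are_set() is hypothetical, and the sketch assumes that activate() is what populates these variables in an interactive session, which the text suggests but does not state outright.

```r
# Hypothetical helper (not part of {touchstone}): are both branch
# references that the touchstone script relies on available?
refs_are_set <- function() {
  refs <- Sys.getenv(c("GITHUB_HEAD_REF", "GITHUB_BASE_REF"))
  # Unset variables come back as empty strings.
  all(nzchar(refs))
}

if (!refs_are_set()) {
  message("Branch references are not set; call touchstone::activate() first.")
}
```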

Setup

We first install the different package versions in separate libraries with branches_install(); this is mandatory for any script.R. If you want to access a directory or file that only exists on one of the branches, you can use pin_assets(..., ref = "your-branch-name") to make it available across branches. You can of course also run arbitrary code to prepare for the benchmarks.

touchstone::branches_install() # installs branches to benchmark
touchstone::pin_assets("data/all.Rdata", "inst/scripts") # pin files and directories

# perform other setup you need for the benchmarks
load(touchstone::path_pinned_asset("all.Rdata"))
source(touchstone::path_pinned_asset("scripts/prepare.R"))
data_clean <- prepare_data(data_all)

Benchmarks

Run any number of benchmarks, with one named benchmark per call of benchmark_run(). As benchmarks are run in a subprocess, setup (like setting a seed) needs to be passed with expr_before_benchmark. You can pass a single function call or a longer block of code wrapped in “{ }”. The expressions are captured with rlang so you can use quasiquotation.
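To see why setup has to travel with the benchmark, the sketch below uses a plain Rscript subprocess as a stand-in for whatever mechanism {touchstone} uses internally (an assumption made for illustration only). A seed set in the calling session does not reach the subprocess, whereas a seed set inside the subprocess makes repeated runs agree. The helper run_in_subprocess() is hypothetical.

```r
# Path to the Rscript binary that belongs to the running R installation.
rscript <- file.path(R.home("bin"), "Rscript")

# Hypothetical helper: evaluate one line of R code in a fresh subprocess
# and return what it printed.
run_in_subprocess <- function(code) {
  system2(rscript, c("--vanilla", "-e", shQuote(code)), stdout = TRUE)
}

set.seed(42) # affects only the current session, not the subprocesses

# Without setup code, each subprocess seeds its own random number generator.
unseeded <- run_in_subprocess("cat(runif(1))")

# With the seed set inside the subprocess, repeated runs agree.
seeded_1 <- run_in_subprocess("set.seed(42); cat(runif(1))")
seeded_2 <- run_in_subprocess("set.seed(42); cat(runif(1))")
identical(seeded_1, seeded_2)
```

The same reasoning applies to attaching packages or loading data: anything the benchmarked expression depends on must be created inside the subprocess.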

# benchmark a function call from your package
touchstone::benchmark_run(
  random_test = yourpkg::fun(data_clean),
  n = 2
)

# Optionally run setup code
touchstone::benchmark_run(
  expr_before_benchmark = {
    library(yourpkg)
    set.seed(42)
  },
  test_with_seed = fun(data_clean),
  n = 2
)

Running the script

After you have committed and pushed the workflow files to your default branch, the benchmarks will be run on new pull requests and on each commit while that pull request is open.

Note that various functions such as branches_install(), pin_assets() and benchmark_run() will check out different branches. They should therefore be the only process running in the directory, and the git status should be clean.

The Results

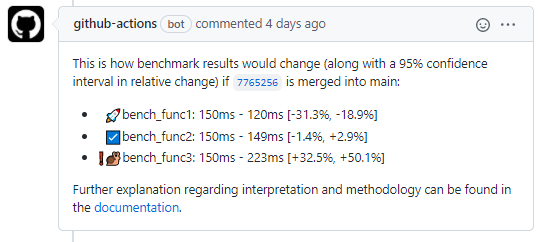

When the GitHub Action successfully completes, the main result you will see is the comment posted on the pull request, it will look something like this:

You can change the header and footer, see ?touchstone::pr_comment. The main body is the list of benchmarks and their results. Each entry shows first the mean elapsed time of all iterations of that benchmark (BASE → HEAD) and then a 95% confidence interval.

To make it easier to spot benchmarks that need closer attention, we prefix the benchmarks with emojis indicating if there was a statistically significant change (see inference) in the timing or not. In this example the HEAD version of func1 is significantly faster, func3 is slower than BASE while there is no significant change for func2.
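The text above does not spell out how the interval is computed; the inference article covers that. Purely as an illustration of the idea, and not as {touchstone}'s actual method, the base R sketch below compares two sets of simulated timings with a Welch t-test and calls the change significant when the 95% confidence interval for the difference in means excludes zero. The helper timing_change() and all numbers are hypothetical.

```r
# Hypothetical helper (not part of {touchstone}): compare two sets of timings
# with a Welch t-test and report whether the mean changed significantly.
timing_change <- function(time_base, time_head, conf_level = 0.95) {
  test <- t.test(time_head, time_base, conf.level = conf_level)
  ci <- as.numeric(test$conf.int) # interval for mean(HEAD) - mean(BASE)
  list(
    mean_base = mean(time_base),
    mean_head = mean(time_head),
    ci = ci,
    significant = ci[1] > 0 || ci[2] < 0 # interval excludes zero
  )
}

# Simulated elapsed times in seconds; these are not real benchmark results.
set.seed(1)
time_base <- rnorm(30, mean = 1.00, sd = 0.05)
time_head <- rnorm(30, mean = 0.90, sd = 0.05)
result <- timing_change(time_base, time_head)
```

An interval that lies entirely below zero corresponds to a HEAD version that is faster than BASE, an interval entirely above zero to a slower one, and an interval that spans zero to no significant change.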

The GitHub Action will also upload this text and plots of the timings as artifacts.

[1] Note that these files must be committed to the default branch before {touchstone} continuous benchmarking will be triggered for new PRs.